Poor ranking on international tests misleading about U.S. performance, new report finds

Socioeconomic inequality among U.S. students skews international comparisons of test scores, finds a new report released today by the Stanford Graduate School of Education and the Economic Policy Institute. When differences in countries' social class compositions are adequately taken into account, the performance of U.S. students in relation to students in other countries improves markedly. The report, What do international tests really show about U.S. student performance?, also details how errors in selecting sample populations of test-takers and arbitrary choices regarding test content contribute to results that appear to show U.S. students lagging.

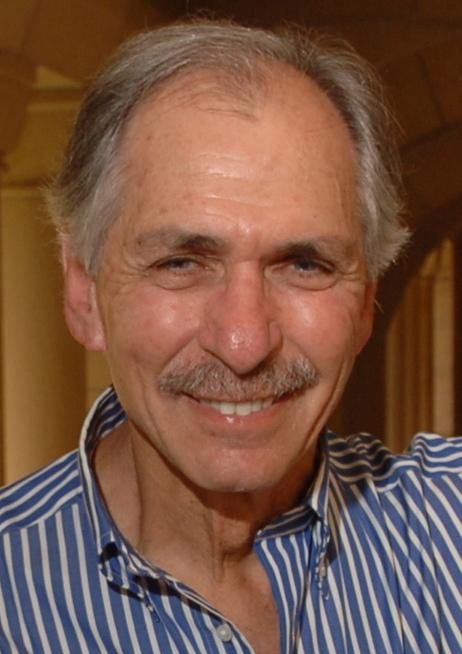

In conducting the research, report co-authors Martin Carnoy, a professor of education at Stanford, and Richard Rothstein, a research associate at the Economic Policy Institute, examined adolescent reading and mathematics results from four test series over the last decade, sorting scores by social class for the Programme for International Student Assessment (PISA), the Trends in International Mathematics and Science Study (TIMSS), and two forms of the domestic National Assessment of Educational Progress (NAEP).

Based on their analysis, the co-authors found that average U.S. scores in reading and math on the PISA are low partly because a disproportionately greater share of U.S. students comes from disadvantaged social class groups, whose performance is relatively low in every country.

As part of the study, Carnoy and Rothstein calculated how international rankings on the most recent PISA might change if the United States had a social class composition similar to that of top-ranking nations: U.S. rankings would rise to sixth from 14th in reading and to 13th from 25th in math. The gap between U.S. students and those from the highest-achieving countries would be cut in half in reading and by at least a third in math.
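The arithmetic behind this kind of adjustment can be illustrated with a minimal sketch. It assumes a simple composition reweighting: each social class group keeps its own average score, and only the group shares are swapped for those of a reference country. The group labels, scores and shares below are hypothetical, chosen for illustration, and are not taken from the report; the report's actual method also corrects for a sampling error and may differ in other ways.

```python
def reweighted_mean(group_means, shares):
    """Average score implied by per-group means and population shares."""
    total = sum(shares.values())
    if abs(total - 1.0) > 1e-9:
        raise ValueError("shares must sum to 1")
    return sum(group_means[g] * shares[g] for g in shares)


# Hypothetical numbers, NOT from the report.
us_group_means = {"low": 450.0, "middle": 500.0, "high": 550.0}
us_shares = {"low": 0.4, "middle": 0.4, "high": 0.2}
reference_shares = {"low": 0.2, "middle": 0.4, "high": 0.4}

# Observed average: U.S. group scores weighted by U.S. group shares.
observed = reweighted_mean(us_group_means, us_shares)

# Adjusted average: same U.S. group scores, reference-country shares.
adjusted = reweighted_mean(us_group_means, reference_shares)

print(f"observed: {observed:.1f}, adjusted: {adjusted:.1f}")
```

With these made-up inputs the adjusted average comes out about 20 points higher than the observed one, even though no group's performance has changed. Only the mix of students has.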

"You can't compare nations' test scores without looking at the social class characteristics of students who take the test in different countries," said Carnoy. "Nations with more lower social class students will have lower overall scores, because these students don't perform as well academically, even in good schools. Policymakers should understand how our lower and higher social class students perform in comparison to similar students in other countries before recommending sweeping school reforms."

The report also found:

- There is an achievement gap between more and less disadvantaged students in every country; surprisingly, that gap is smaller in the United States than in similar post-industrial countries, and not much larger than in the very highest scoring countries.

- Achievement of U.S. disadvantaged students has been rising rapidly over time, while achievement of disadvantaged students in countries to which the United States is frequently unfavorably compared – Canada, Finland and Korea, for example – has been falling rapidly.

- But the highest social class students in the United States do worse than their peers in other nations, and this gap widened from 2000 to 2009 on the PISA.

- U.S. PISA scores are depressed partly because of a sampling flaw resulting in a disproportionate number of students from high-poverty schools among the test-takers. About 40 percent of the PISA sample in the United States was drawn from schools where half or more of the students are eligible for the free lunch program, though only 32 percent of students nationwide attend such schools.

With each release of international test scores, many education leaders assert that American students are unprepared to compete in the new global economy, largely because of U.S. schools' shortcomings in educating disadvantaged students.

"Such conclusions are oversimplified, frequently exaggerated and misleading," said Rothstein, who is also a senior fellow at the Chief Justice Earl Warren Institute of Law and Social Policy at the University of California – Berkeley School of Law. "They ignore the complexity of test results and may lead policymakers to pursue inappropriate and even harmful reforms."

Carnoy and Rothstein examined test results in detail from the United States and six other nations: three of the highest scorers (Canada, Finland and South Korea) and three economically comparable nations (France, Germany and the United Kingdom). In cases where U.S. states voluntarily participated in the TIMSS for 8th grade mathematics, the researchers compared trends in these states' scores with trends in 8th grade mathematics on the NAEP.

The researchers show that score trends on these different tests can be very inconsistent, suggesting the need for greater caution in interpreting any single test. For example, declining trends in U.S. average PISA math scores do not track with trends in TIMSS and NAEP, which show substantial math improvements for all U.S. social classes.

Carnoy and Rothstein say that the differences in average scores on these tests reflect arbitrary decisions about content by the designers of the tests. For example, although it has been widely reported that U.S. 15-year-olds perform worse on average than students in Finland in mathematics, U.S. students perform better than students in Finland in algebra but worse in number properties (e.g., fractions). If algebra had greater weight in tests, and numbers less weight, test scores could show that U.S. overall performance was superior to that of Finland.
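The effect of content weighting can be shown with a small sketch. It assumes an overall score is a weighted average of sub-area scores, which is a simplification and not necessarily how PISA or TIMSS build their scales. The countries, sub-scores and weights are hypothetical.

```python
def composite(subscores, weights):
    """Weighted average of sub-area scores; weights need not sum to 1."""
    total_weight = sum(weights.values())
    return sum(subscores[k] * weights[k] for k in weights) / total_weight


# Hypothetical sub-scores, NOT actual test results.
country_a = {"algebra": 520.0, "numbers": 480.0}  # stronger in algebra
country_b = {"algebra": 490.0, "numbers": 530.0}  # stronger in numbers

# Two hypothetical test designs that weight the same content differently.
numbers_heavy = {"algebra": 0.3, "numbers": 0.7}
algebra_heavy = {"algebra": 0.8, "numbers": 0.2}

for name, weights in [("numbers-heavy", numbers_heavy),
                      ("algebra-heavy", algebra_heavy)]:
    a = composite(country_a, weights)
    b = composite(country_b, weights)
    leader = "A" if a > b else "B"
    print(f"{name}: A={a:.1f}, B={b:.1f}, leader={leader}")
```

In this illustration the same two sets of sub-scores put country B ahead under the numbers-heavy design and country A ahead under the algebra-heavy one, which is the kind of reversal the authors describe.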

The report comes as the administrators of TIMSS are preparing to make public more detailed data underlying 2011 test results, following last month's release of average national scores. PISA plans to release detailed data on its 2012 test in December 2013. Carnoy said that he and Rothstein will then be able to supplement their present report by comparing the most recent TIMSS and PISA results by social class across countries. He invites other researchers to conduct similar analyses, to see if their findings confirm those of the present report.

A copy of the report is available to download at http://www.epi.org/publication/us-student-performance-testing/.

********************************************************************************************************************************

Jan. 29 update

Martin Carnoy and Richard Rothstein have revised certain numbers in their report, What do international tests really show about U.S. student performance?, after being made aware of more accurate information concerning the survey and timing of U.S. students taking the PISA test in 2009. The revisions do not change the report’s conclusions. As a result of the new information, the story above has been changed to reflect the following revisions. The U.S. rankings on the 2009 PISA test in reading and math would rise respectively to sixth (not fourth) from 14th and to 13th (not 10th) from 25th after controlling for social class differences and a sampling error by PISA and also after eliminating between-country differences that are statistically too small to meaningfully affect a country's ratings. The change follows the authors' revision of a figure they used in calculating the sampling error: At the time the test was applied, 32 percent (not 23 percent) of U.S. students eligible for the free lunch program were in schools where more than half of the students qualify for this benefit. For a more in-depth explanation of the changes, as well as answers to other questions about the report, please see “Response from Martin Carnoy and Richard Rothstein to OECD/PISA comments.” The Economic Policy Institute has posted the revised version of the report on its website.